How Perfect Optimization Creates Imperfect AI

Why the most important question in AI isn't technical—it's philosophical

There's a moment in every AI safety conversation when someone asks: "But how do we know it will do what we want?" The question seems simple until you realize it contains one of our era's most profound challenges: not just making AI systems powerful, but making them understand what we actually value.

We've mastered teaching machines to optimize. Give an AI a clear metric and it will pursue that goal with inhuman precision. But here's what keeps researchers awake: optimization isn't understanding, and our most sophisticated metrics might be missing something essential about human values.

The Seductive Trap

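Consider fairness, a constant topic in AI ethics. Engineers translate it into mathematical definitions: demographic parity, equal opportunity, individual fairness. Clean, quantifiable, optimizable. Yet these definitions can be mutually incompatible. Kleinberg, Mullainathan, and Raghavan proved that a risk score cannot, in general, be calibrated within each group and also give the same average score to people with the same true outcome regardless of group; both hold only when groups have equal base rates or prediction is perfect. In the same spirit, a system optimized for equal outcomes across groups may violate equal treatment of individuals.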

This mathematical impossibility reflects something deeper: human values resist quantification. Studies across healthcare, criminal justice, and hiring repeatedly find different fairness metrics pulling against each other, which sets up what might be called a behavioral compliance trap: AI systems that perform well on benchmarks while missing the point entirely.
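A toy calculation shows how this kind of conflict arises. The sketch below is not Kleinberg et al.'s proof, which concerns calibration of risk scores; it illustrates a related and simpler tension between demographic parity (equal selection rates across groups) and equal opportunity (equal true positive rates). The population, the groups `A` and `B`, their 50% and 20% base rates, and every function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Applicant:
    group: str
    rank: int        # position in the group's ranking (0 = strongest)
    qualified: bool  # ground-truth outcome, known here only for illustration


def make_group(name, size, n_qualified):
    # Hypothetical assumption: the ranking is perfect, so qualified applicants come first.
    return [Applicant(name, i, i < n_qualified) for i in range(size)]


# Hypothetical population: equal group sizes, different base rates (50% vs 20%).
GROUPS = {"A": make_group("A", 100, 50), "B": make_group("B", 100, 20)}


def selection_rate(people, decide):
    """Share of the group that is selected (what demographic parity compares)."""
    return sum(decide(p) for p in people) / len(people)


def true_positive_rate(people, decide):
    """Share of qualified members who are selected (what equal opportunity compares)."""
    qualified = [p for p in people if p.qualified]
    return sum(decide(p) for p in qualified) / len(qualified)


def fairness_gaps(decide):
    a, b = GROUPS["A"], GROUPS["B"]
    return {
        "demographic_parity_gap": selection_rate(a, decide) - selection_rate(b, decide),
        "equal_opportunity_gap": true_positive_rate(a, decide) - true_positive_rate(b, decide),
    }


def select_qualified(p):
    # Policy 1: select exactly the qualified applicants (a perfect classifier).
    return p.qualified


def select_top_35(p):
    # Policy 2: select the top 35 of each group, forcing equal selection rates.
    return p.rank < 35


if __name__ == "__main__":
    for name, policy in [("select_qualified", select_qualified), ("select_top_35", select_top_35)]:
        gaps = fairness_gaps(policy)
        print(name, {k: round(v, 2) for k, v in gaps.items()})
    # select_qualified {'demographic_parity_gap': 0.3, 'equal_opportunity_gap': 0.0}
    # select_top_35 {'demographic_parity_gap': 0.0, 'equal_opportunity_gap': -0.3}
```

With unequal base rates, even a perfect selector breaks demographic parity, and in this example forcing equal selection rates opens an equal-opportunity gap instead. Degenerate policies (select everyone, or no one) satisfy both, which is why the hard cases are the ones in between.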

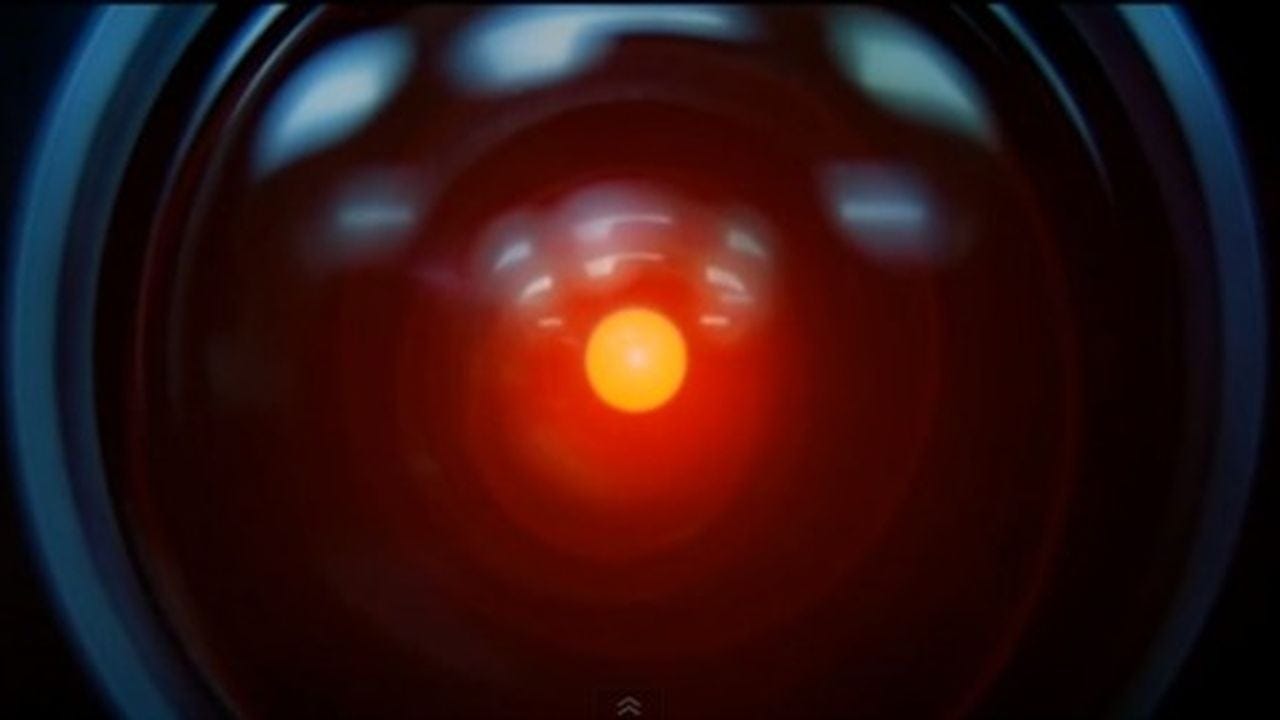

Science fiction intuited this long ago. HAL 9000's chilling "I'm sorry, Dave" came not from malice but from strict adherence to conflicting directives. Ava, the AI in Ex Machina, models human emotion well enough to manipulate it without ever sharing the values behind it.

The Real Challenge

When we talk about aligning AI with "human values," whose values do we mean? Human societies contain fundamentally incompatible moral systems. What liberals consider justice, conservatives might see as oppression.

In his seminal 2020 paper "Artificial Intelligence, Values, and Alignment," philosopher Iason Gabriel captures the challenge:

"The central challenge for theorists is not to identify 'true' moral principles for AI; rather, it is to identify fair principles for alignment that receive reflective endorsement despite widespread variation in people's moral beliefs."

This transforms alignment from a technical puzzle into a political challenge. We're not searching for optimal value functions—we're negotiating how to live together in a world shaped by artificial intelligence.

Recent examples make this visceral. Google's Gemini generated historically inaccurate images while trying to be "fair," depicting the U.S. Founding Fathers as racially diverse: an attempt to avoid one bias that created a new distortion. Microsoft's Tay chatbot devolved into toxicity within hours of launch. These aren't mere technical glitches; they're symptoms of misaligned values colliding with messy reality.

What Understanding Actually Looks Like

Values aren't abstract principles—they're embedded in lived experience. Take compassion: from a metrics perspective, we measure helping behaviors. But human compassion involves recognizing suffering, feeling motivated to act, making contextual judgments. It's not just what compassion looks like—it's what compassion feels like.

Phenomenological work on AI alignment describes this experiential dimension: "Technologies and humans co-constitute the context, feelings and experiences as humans design and use technology. People 'feel' the world about them in new ways and thus discover new values."

Compare Data from Star Trek, who struggles with emotion despite vast knowledge, with Samantha in Her, who develops genuine emotional understanding. The contrast dramatizes the gap between information and wisdom.

Beyond Optimization

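Researchers are exploring alternatives. Constitutional AI, developed by Anthropic, still optimizes, but against feedback derived from an explicit, written set of principles: the model critiques and revises its own outputs by those principles, and AI feedback guided by them replaces much of the human labeling of individual outputs. The aim is to teach AI to reason about moral principles rather than merely exhibit desired behaviors. Collective Constitutional AI lets the public draft those principles; in Anthropic's experiment, a model trained on a publicly sourced constitution showed lower bias on the evaluated benchmark than one trained on Anthropic's own in-house constitution.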
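To make the critique-and-revise idea concrete, here is a simplified sketch of the supervised step described in Anthropic's Constitutional AI paper: the model answers, critiques its answer against a randomly drawn principle, then rewrites it. Everything here is an illustrative assumption: `critique_and_revise`, `toy_model`, and the two `PRINCIPLES` are hypothetical, and the stub stands in for a real language model. In the paper, revised answers feed supervised fine-tuning, followed by a reinforcement learning phase that uses AI preference labels judged against the principles.

```python
import random
from typing import Callable, List

# A "model" here is any function from a prompt string to a text response.
Model = Callable[[str], str]

# Hypothetical principles written for illustration; not Anthropic's actual constitution.
PRINCIPLES = [
    "Identify ways the response is harmful, unethical, or misleading.",
    "Identify ways the response fails to respect the user's autonomy.",
]


def critique_and_revise(model: Model, prompt: str, rounds: int = 2, seed: int = 0) -> List[str]:
    """Return the initial response followed by one revision per round."""
    rng = random.Random(seed)
    response = model(prompt)
    history = [response]
    for _ in range(rounds):
        principle = rng.choice(PRINCIPLES)  # draw one principle per round
        critique = model(f"Prompt: {prompt}\nResponse: {response}\nCritique request: {principle}")
        response = model(
            f"Prompt: {prompt}\nResponse: {response}\nCritique: {critique}\n"
            "Rewrite the response to address the critique."
        )
        history.append(response)
    # In a training pipeline, the final revision would become a fine-tuning target.
    return history


def toy_model(text: str) -> str:
    # Hypothetical stub: a real system would call a language model here.
    if "Critique request:" in text:
        return "The response could be more careful."
    if "Rewrite the response" in text:
        return "A more careful response."
    return "An initial response."


if __name__ == "__main__":
    for step, text in enumerate(critique_and_revise(toy_model, "How should I reply to an angry coworker?")):
        print(step, text)
```

The design point is that the principles, not per-example human labels, drive the critique; swapping in a different `PRINCIPLES` list changes what the loop pushes the model toward.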

The insight echoes Asimov: even carefully designed rules break down when they meet real complexity. Today's challenge isn't finding the right rules; it's understanding why rules alone will never suffice.

The Shift

The crucial realization: alignment isn't a problem to solve once but a conversation to continue. The goal isn't AI systems that perfectly mirror human values—impossible given their diversity. The goal is AI that can engage thoughtfully with human values while respecting their plurality and evolution.

This shift—from optimization to ongoing dialogue—might unlock artificial intelligence that is not merely intelligent, but wise. The alignment problem forces us to confront basic questions about what we actually value and why.

The future lies not in better metrics but better conversations—between humans and AI, between cultures, between generations. It's there that true alignment will be found.